106 nonconsecutive hours ago, I started tooling around with electronic music. Before that, I had no experience with songwriting or instrumental arrangement. I also had no experience with the technical side of creating music, even music made almost entirely from pre-recorded samples. Today, I feel satisfied enough with the results of this effort to share them. Notice the use of the word ‘enough.’

Let me lay down a little backstory: I have a lot of very talented friends. Musicians, photographers, fine artists, writers, directors (the guys from Dirty Wizard deserve a specific acknowledgement). They’re around me in spades and ever-so-intimidating. They also happen to be about the most encouraging group of people you’ll ever meet. Since I was a teenager, I’ve been listening in and trying to observe my friends making music. I’ve been trying to put what they’re doing in the context of a system that I could understand.

It was mostly luckless. Although I’ve always really liked the idea of creating music, my fingers just don’t work that way. And honestly, my voice, well… it’s not going to make anyone put down their drink. I had told these friends as much, and although none of them could put it in a way I could act on at the time, they kept encouraging me to give it a try. And that stuck with me.

Recently, while spending more time than I had intended watching YouTube, I found myself learning about ‘MIDI’. It wasn’t an altogether foreign concept to me, but the last I’d heard of it, it was what they used to make the Mario Brothers theme song. Videogame music, and that’s about it. It was music made without an ‘instrument’. But that’s when the bombshell hit: a lot of the music I like today is created using MIDI. It had gotten a lot better when I wasn’t looking.

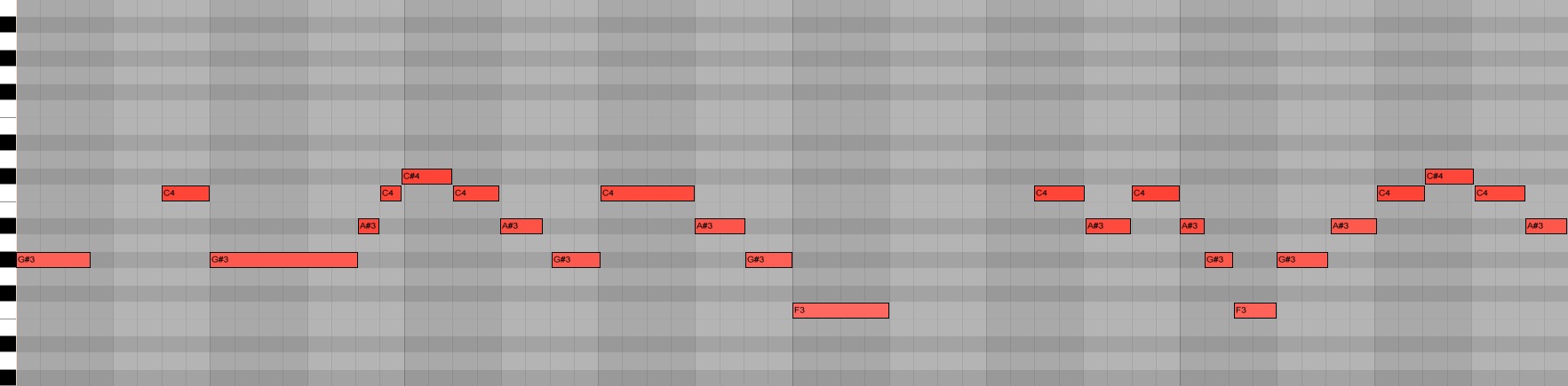

If you want to get specific, MIDI (Musical Instrument Digital Interface) is a technical standard that allows instruments, devices, and computers to communicate with one another. MIDI carries ‘event signals’, such as note, pitch, and vibrato, between instruments and devices. So when you play the A key, the computer knows you played the A key. Once a MIDI recording is made, it can be played back just like anything else. What plays back is, obviously, not a live instrument; instead, the computer plays a sample of, for example, a piano playing the A key.
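To make the ‘event signal’ idea a little more concrete, here is a minimal Python sketch of the bytes behind a single note, assuming the standard MIDI 1.0 channel message layout: a Note On message is a status byte (0x90 plus a channel number from 0 to 15) followed by a note number and a velocity, each from 0 to 127, and Note Off uses 0x80 instead. Note 69 is the A above middle C (A4), which in standard equal-temperament tuning sounds at 440 Hz. The helper names are hypothetical; in practice the DAW builds these messages for you, and the notes on the grid are essentially pairs of these on/off events.

```python
def note_on(note, velocity=100, channel=0):
    """Build a 3-byte MIDI Note On message (hypothetical helper)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    # Status byte: high nibble 0x9 means Note On, low nibble is the channel.
    return bytes([0x90 | channel, note, velocity])


def note_off(note, velocity=0, channel=0):
    """Build a 3-byte MIDI Note Off message (hypothetical helper)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    # High nibble 0x8 means Note Off.
    return bytes([0x80 | channel, note, velocity])


def note_frequency(note):
    """Pitch of a MIDI note in equal temperament, with A4 (note 69) at 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


A4 = 69
print(note_on(A4).hex())     # 904564: Note On, channel 1, note 69, velocity 100
print(note_off(A4).hex())    # 804500
print(note_frequency(A4))    # 440.0
```

The message says nothing about what the note sounds like; that is left to whatever sample or synthesizer receives it, which is why the same recording can be played back as a piano, a synth, or anything else.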

But here’s the really magical part: once a MIDI recording is made, all of the notes and instruments can be seen on a grid. A visual medium. In fact, you don’t even need an instrument. You can just draw the notes straight onto the grid if you want to, which is exactly what I did.

This is what I saw in the YouTube video that day that caught my attention. A grid of ‘music’. It suddenly occurred to me that, while there aren’t any musical instruments that I can play well enough not to embarrass myself, there is one instrument that I’m very comfortable with: the computer. And here I come to find out that this instrument can be used to make music?

That was the beginning of the Shix project (which got its name when I accidentally misspelled “shit” in the filename of my first song). Given the tools, could I actually create music that I myself would like listening to in the car? Could I beat the learning curve I believed existed? Could I muster enough confidence to share the endeavor with my friends? I found a lot of insights about myself, art, creativity and critique in this project.

Though I didn’t know where I was going with it, I decided from the get-go that I wanted my encouraging friends to pull me into the water at a time I was likely to just stick my feet in the sand. I was vocal about my interest, and as soon as I had created something that I thought warranted sharing, I put it on my iPhone and let them hear it.

It wasn’t very good, and they were disproportionately enthusiastic, in spite of hearing the project in its infancy. But it was inspiring, and I kept trying.

The funny thing about art, of any kind, is that it isn’t good until it’s done. Before that, and sometimes after that, it’s a steaming pile of horseshit. Embers of embarrassment that will scarcely dim in the years to come. Of course, that’s probably not true. But god, it really feels that way. What I’m trying to say is that when we are learning to create something, there’s a hump in there that is exceptionally difficult to get over. You don’t have the experience to know that it’s going to look great when it’s done, and all you see in front of you looks/sounds/feels like crap. Most of the time we give up, which is unfortunate.

In this case, when these tracks started sounding really bad, I was able to recognize the trend. And with all the positivity coming from my friends, I stuck with it.

Finally, I finished my first song, Uh, Oh, Optional, a name I just made up because I was writing a blog post. It was based on looped vocal melodies. Arrangement-wise, it came together in just under 4 hours. It wasn’t a masterpiece, but I was eager to get to the technical bit of making the song sound the way I wanted it to. This was where I started learning about compression, EQ, and something called the ‘chorus’ effect, which soon became a favorite.

After the first song, which a friend described as “kind of sad sounding,” I wanted to make something that sounded happy on purpose. With Something Will Be, I wasn’t strictly successful in that endeavor, but the result definitely had a happier tone, albeit a bit accidentally, I think. This was the first time I managed to convert vocals into MIDI notes.

Breaking at their Best was intended to be something dancy. As it turns out, ‘dancy’ takes more than just a consistently thumping kick drum. Unfortunately, dancy just isn’t what I get from this one, but maybe I’ve just heard it too many times. It was, however, the first song for which I managed to record all of the vocals at once. It was also the song that taught me what clipping sounds like, and that simply stacking drum samples on top of each other won’t make the bass louder.
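Why doesn’t stacking work? Here is a toy Python sketch under some loud assumptions: audio is modeled as a list of floats where 1.0 is digital full scale, the ‘drum sample’ is a hypothetical single cycle of a sine wave rather than a real kick, and clipping is modeled as a simple hard clip, roughly what happens when a signal over full scale is written out to a fixed-point audio file. Layering two copies of the same sample doubles the peak, but the output can’t go past 1.0, so the extra level gets sliced off as distortion instead of turning into louder bass.

```python
import math


def toy_kick(n=32, amp=0.8):
    """Hypothetical stand-in for a drum sample: one cycle of a sine wave."""
    return [amp * math.sin(2 * math.pi * i / n) for i in range(n)]


def mix(*tracks):
    """Layering samples amounts to adding them together sample by sample."""
    return [sum(frame) for frame in zip(*tracks)]


def hard_clip(samples, limit=1.0):
    """Anything beyond full scale is flattened to the limit."""
    return [max(-limit, min(limit, s)) for s in samples]


def peak(samples):
    return max(abs(s) for s in samples)


kick = toy_kick()
stacked = mix(kick, kick)     # two copies of the same kick, layered
output = hard_clip(stacked)   # what survives at full scale

print(round(peak(kick), 3))      # 0.8
print(round(peak(stacked), 3))   # 1.6, well over full scale
print(round(peak(output), 3))    # 1.0, the tops are sliced off
# Count how many samples are pinned flat against the limit.
print(sum(1 for s in output if abs(s) == 1.0))   # 18 of 32
```

More than half of the toy waveform ends up as flat plateaus, which is the harsh, crunchy sound of clipping. Turning each layer down so the sum stays below 1.0 avoids it, which is why the level of the mix, not the number of layers, is what decides how loud the result can be.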

Nature Center was intended to sound a touch theatrical, and I think I accomplished that to some degree. It was also the first time, at the suggestion of a good friend, that I attempted to blend artificial and organic-sounding instruments, for the finale.

Whenever I finished a song and thought it sounded okay, I would put it on my iPhone and listen to it in the car. I’d listen to it on my headphones, shelf speakers, Bluetooth speaker, iPhone speaker and any other speaker that wasn’t too disruptive to try. I’ve become quite conscious of which frequencies will and will not come through on the speakers I’m testing on. Using that information, I would continue to tweak the mixes. As I learned new things about improving the final mix, I would go back to the previous songs and polish them up.

After mixing my last song, I decided it was probably time to actually put something online. So I went back through again, for one last spit-shine.

In the whole process of learning to make music, I found myself suddenly relating to things my friends have complained about. Comparisons to other music are inevitable. In fact, when you’re starting from a clean slate, they’re a pretty major force. Eventually I started taking their advice, which came consistently in the form of a question: “Does it sound good to you?” The comparisons are not useful, except maybe for figuring out whether your song is loud enough. Seriously, that’s the bitch.

I’ve had a lot of fun with this project and expect that it will become ongoing. I don’t have any plans to figure out how to perform these live, but maybe someday, somehow. That’s something I’ve never done before.
